For nearly a year, I’ve been considering moving my home server to new hardware, mainly because I’m still working out which software setup best fits my needs. While experimenting with different configurations on the “new” hardware, I recently compared two storage setups, LVM+Ext4 and ZFS, to see which suits my workloads better. My server currently runs Ext4 on LVM, but I’ve been contemplating a switch to ZFS because of its many features and advantages.

Instead of setting up physical servers, I used Proxmox and created three VMs: one running Ubuntu Server with a secondary hard drive using LVM+Ext4, a second one with ZFS, and a Debian desktop VM for additional testing. Another reason for choosing Proxmox is that I’m considering converting my physical file server into a virtual one, most likely on Proxmox (with VMware ESXi as an alternative).

All VMs used the same hardware configuration: CPU type “host” (1 socket, 4 cores), 8 GB of RAM, and default values for all other settings.

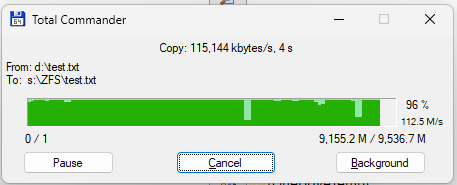

To copy files from the Windows machine, I used the latest version of Total Commander, which conveniently shows a copy-speed chart in the progress bar along with the numeric speed. On the Debian VM, I used the Dolphin file manager, which also displays the copy speed.

The copy speed from Windows stayed relatively stable throughout the tests, but the speed from Debian fluctuated, so for Test Groups 2–5 I calculated the average transfer speed from the amount of test data and the copy time.
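Computing an average from size and time is simple arithmetic; the Python sketch below shows it, assuming decimal units (1 MByte = 1,000,000 bytes). The function name and the 4 GB / 25 s example are hypothetical, not figures from these tests.

```python
def average_speed_mb_s(total_bytes: int, seconds: float) -> float:
    """Average transfer speed in decimal MBytes/s (1 MByte = 1,000,000 bytes)."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return total_bytes / seconds / 1_000_000


# Hypothetical example: 4 GB copied in 25 seconds
print(f"{average_speed_mb_s(4_000_000_000, 25):.1f} MBytes/s")
```

If a tool reports binary units (MiB), divide by 1,048,576 instead of 1,000,000.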

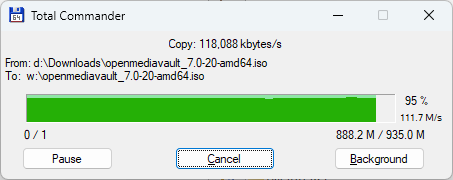

Test Group 1: Transferring a large file from the physical Windows desktop

Test 1.1: Transferring a large file to the physical server.

The Windows 11 Pro desktop and the physical Ubuntu server are connected to the same wired 1 Gbit network. The copy speed peaks at about 118 MBytes/s, which is essentially the practical limit of the 1 Gbit network.
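Why about 118 MBytes/s rather than the 125 MBytes/s that 1 Gbit/s naively suggests: every Ethernet frame carries framing and headers in addition to file data. The Python sketch below estimates the best-case TCP payload rate, assuming a standard 1500-byte MTU, IPv4, and TCP headers without options. SMB overhead and TCP options such as timestamps are ignored, so real copies land slightly lower.

```python
# Per-frame overhead on the wire for standard 1500-byte MTU Ethernet (bytes)
PREAMBLE_SFD = 8      # preamble + start-of-frame delimiter
ETH_HEADER = 14       # destination MAC, source MAC, EtherType
FCS = 4               # frame check sequence
INTERFRAME_GAP = 12   # minimum idle time between frames, in byte times
MTU = 1500
IP_HEADER = 20        # IPv4 without options
TCP_HEADER = 20       # TCP without options (assumption: no timestamps)


def tcp_goodput_mb_s(link_bits_per_s: float) -> float:
    """Best-case TCP payload rate in decimal MBytes/s for a given link speed."""
    wire_bytes = PREAMBLE_SFD + ETH_HEADER + MTU + FCS + INTERFRAME_GAP  # 1538
    payload_bytes = MTU - IP_HEADER - TCP_HEADER                         # 1460
    raw_bytes_per_s = link_bits_per_s / 8
    return raw_bytes_per_s * payload_bytes / wire_bytes / 1_000_000


print(f"1 Gbit:  {tcp_goodput_mb_s(1e9):.1f} MBytes/s")
print(f"10 Gbit: {tcp_goodput_mb_s(10e9):.1f} MBytes/s")
```

This prints about 118.7 MBytes/s for 1 Gbit and 1186.6 MBytes/s for 10 Gbit; the latter is roughly what a virtual server would need to sustain to match a physical one on a 10 Gbit network.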

Test 1.2: Transferring a large file to the virtual server with LVM+Ext4 storage.

The copy speed here matches Test 1.1, so on a 1 Gbit network it makes no difference whether the file server is physical or virtual. With a 10 Gbit network, copying to the virtual server might turn out to be slower than copying to a physical one, but I currently have no way to verify this.

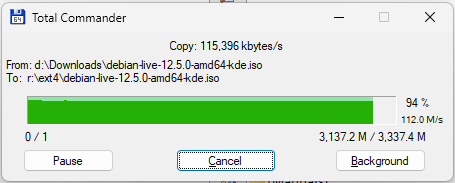

Test 1.3: Transferring a large file to the virtual server with ZFS storage.

Copying to the ZFS server is a different story. Although the peak speed stays at around 115 MBytes/s, the transfer shows three speed drops (a fourth one occurred while I was taking the screenshot). To understand why, I dug into how ZFS works and searched forums and manuals for solutions. It turns out that many users run into this problem. I tried various adjustments, such as different caching and mirroring configurations, but none of them helped. What I did learn is that ZFS buffers writes in RAM and periodically has to flush that cache to disk. This explains the speed drops: while ZFS is busy flushing its cache to disk, incoming data has to wait. Increasing the ZFS VM’s RAM to 16 GB supported this hypothesis: the speed drops started later, because the server could devote more RAM to ZFS caching.
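The flushing explanation can be illustrated with a simple fill-and-drain model: data arrives from the network faster than the disk absorbs it, the difference piles up in a RAM buffer, and once the buffer is full the sender is held back. This is a deliberately simplified sketch, not how ZFS is actually implemented (ZFS groups writes into transaction groups and throttles gradually as dirty data builds up, with limits such as the OpenZFS tunable zfs_dirty_data_max). The disk speed and the “buffer is about 10% of RAM” figure below are hypothetical placeholders, not values measured in these tests.

```python
def seconds_until_buffer_full(buffer_mb: float, ingress_mb_s: float, disk_mb_s: float) -> float:
    """Time until a RAM write buffer fills in a simple fill-and-drain model.

    The buffer grows at (ingress - disk) MBytes/s. If the disk keeps up,
    the buffer never fills and the result is infinity.
    """
    net_fill_rate = ingress_mb_s - disk_mb_s
    if net_fill_rate <= 0:
        return float("inf")
    return buffer_mb / net_fill_rate


# Hypothetical numbers: network delivers 115 MBytes/s, disk sustains 80 MBytes/s,
# and the write buffer is assumed to be ~10% of RAM.
for ram_gb, buffer_mb in [(8, 800), (16, 1600)]:
    t = seconds_until_buffer_full(buffer_mb, ingress_mb_s=115, disk_mb_s=80)
    print(f"{ram_gb} GB RAM: buffer full after ~{t:.0f} s")
```

In this model, doubling the buffer doubles the time before the first slowdown, which matches the direction of the change seen when going from 8 GB to 16 GB of RAM, though not necessarily its exact timing.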

Test Group 2: Transferring a large file from the virtual desktop

With the second test group, I wanted to measure how fast the VMs could exchange data over their virtual network. To do this, I set up a Debian desktop VM in Proxmox and copied a large file from it to the virtual servers.

Test 2.1: Copying a large file to the virtual server with LVM+Ext4 storage.

The transfer started at about 250 MBytes/s and then gradually slowed down. The lowest speed was around 150 MBytes/s, and the average was about 180 MBytes/s.
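Converting these figures into network units shows that the Proxmox virtual network was not capped at 1 Gbit/s: even the lowest speed exceeds the roughly 125 MBytes/s that a 1 Gbit link can carry. A small Python sketch, assuming the reported MBytes are decimal:

```python
def mbytes_to_gbits(mbytes_per_s: float) -> float:
    """Convert decimal MBytes/s to Gbit/s (1 byte = 8 bits)."""
    return mbytes_per_s * 8 / 1000


# Speeds observed in Test 2.1, in MBytes/s
for label, speed in [("start", 250), ("lowest", 150), ("average", 180)]:
    print(f"{label:>8}: {speed} MBytes/s = {mbytes_to_gbits(speed):.2f} Gbit/s")
```

This prints 2.00, 1.20 and 1.44 Gbit/s respectively.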

Test 2.2: Copying a large file to the virtual server with ZFS storage.

At first, Dolphin reported a peak speed of 380 MBytes/s, which seemed unrealistic and was most likely a caching effect. During the transfer, however, the speed dropped many times, more often than in Test 1.3. At times it fell to 0 MBytes/s, and error messages such as “Could not write to file.” appeared. It was puzzling to see such poor transfer performance between two Linux systems, apparently caused by ZFS. Setting up another Ubuntu server with ZFS did not help: the same issues persisted. Increasing the server’s RAM to 16 GB did not alleviate the problem either, unlike in Test 1.3, where the extra RAM pushed the speed drops later into the copy.

Test Group 3: Repeating Test Group 2 with Multiqueue and Multi Channel on and off

On a discussion board, a user asked how to reach 10 Gbit/s between Proxmox VMs and physical machines connected to a 10 Gbit wired network. Despite the fast network, they could only reach a peak throughput of 1 Gbit/s. Someone suggested setting the Multiqueue parameter of the VMs’ network cards to 4, which raised VM-to-physical throughput to as much as 2.5 Gbit/s. I also found another suggested way to speed up network transfers: enabling Multi Channel in Samba.
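In Proxmox, Multiqueue is an option of a VM’s network device: the queues= field of its netN setting (also shown in the GUI under the network device’s advanced options). The Python sketch below only rewrites a net0 value string to add or change that field; the MAC address and bridge are placeholders, and applying the result would be done with something like qm set <vmid> --net0 <value>. Setting the queue count to the number of vCPUs (4 in these VMs) follows the general advice in the Proxmox documentation.

```python
def set_multiqueue(net_value: str, queues: int) -> str:
    """Return a Proxmox netN value string with queues=<n> added or replaced.

    net_value looks like 'virtio=BC:24:11:00:00:01,bridge=vmbr0,firewall=1'.
    """
    if queues < 1:
        raise ValueError("queues must be a positive integer")
    parts = [p for p in net_value.split(",") if p]
    # Drop any existing queues setting, then append the new one
    parts = [p for p in parts if not p.startswith("queues=")]
    parts.append(f"queues={queues}")
    return ",".join(parts)


net0 = "virtio=BC:24:11:00:00:01,bridge=vmbr0,firewall=1"  # placeholder MAC and bridge
print(set_multiqueue(net0, 4))
# virtio=BC:24:11:00:00:01,bridge=vmbr0,firewall=1,queues=4
```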

I did not test copying to the ZFS server in this group, because the constant traffic drops made further tests impractical. Despite extensive research and trying various recommendations, such as adjusting caching, mirroring, and other settings, I saw no improvement. I agree with the common view that ZFS is simpler to set up than LVM+Ext4, but troubleshooting it turned out to be harder than I expected. Much as I appreciate what ZFS offers, I decided to postpone adopting it, stop testing the ZFS VM, and focus on Ext4 in Test Groups 3 and 4.

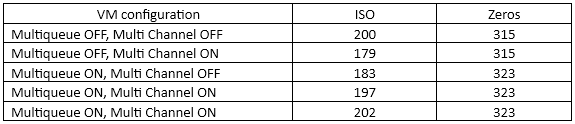

Here are the results for copying to the Ext4 virtual server (in MBytes/s):

“Zeros” refers to a file created with the fallocate command; reading such a file returns only zero bytes. “ISO” refers to a Debian installation ISO image.

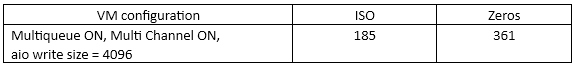
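For anyone reproducing the “Zeros” test file, here is a Python equivalent of the fallocate step (Linux, via os.posix_fallocate), plus a check that the file really reads back as zeros. The file name and size are arbitrary choices for the sketch.

```python
import os
import tempfile


def make_zeros_file(path: str, size: int) -> None:
    """Allocate `size` bytes without writing data, similar to `fallocate -l <size> <path>`."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        os.posix_fallocate(fd, 0, size)  # Unix-only; allocated range reads back as zeros
    finally:
        os.close(fd)


def is_all_zeros(path: str, chunk: int = 1 << 20) -> bool:
    """Read the file in chunks and check that every byte is zero."""
    with open(path, "rb") as f:
        while block := f.read(chunk):
            if block.count(0) != len(block):
                return False
    return True


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "zeros.bin")
    make_zeros_file(path, 8 * 1024 * 1024)  # 8 MiB test file
    print(os.path.getsize(path), is_all_zeros(path))
```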

I also came across a suggestion to set the Samba parameter aio write size to 4096 instead of the commonly recommended 1. Here is how this change affected the test results:

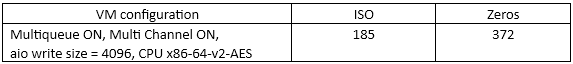
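Both Samba settings used in Test Group 3, server multi channel support and aio write size, can be set in the [global] section of smb.conf. The Python sketch below uses configparser to set them in a copy of the config text. It assumes a plain INI-style smb.conf, and configparser drops comments when writing, so on a real server editing smb.conf by hand and checking it with testparm is the safer route.

```python
import configparser
import io


def set_samba_globals(conf_text: str, options: dict[str, str]) -> str:
    """Return smb.conf text with the given options set in the [global] section.

    Note: configparser drops comments and normalizes spacing when writing.
    """
    # smb.conf uses %-macros such as %U, so disable interpolation
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as given
    parser.read_string(conf_text)
    if not parser.has_section("global"):
        parser.add_section("global")
    for key, value in options.items():
        parser.set("global", key, value)
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


sample = "[global]\nworkgroup = WORKGROUP\n\n[share]\npath = /srv/share\n"
print(set_samba_globals(sample, {
    "server multi channel support": "yes",
    "aio write size": "4096",
}))
```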

Next, I changed the processor type on both the Ext4 server VM and the Debian desktop VM to x86-64-v2-AES, the default for VMs created in Proxmox:

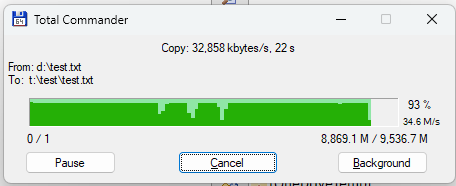

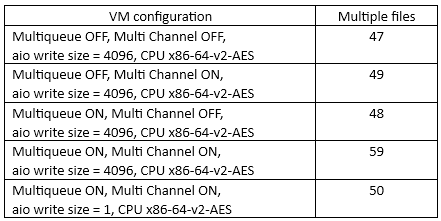

Test Group 4: Copying multiple files from the virtual desktop

I ran a series of tests to measure the copy speed between the Debian desktop VM and the virtual Ext4 server using a dataset of 14,139 files of various sizes, totaling 6.8 GB. Here are the results:

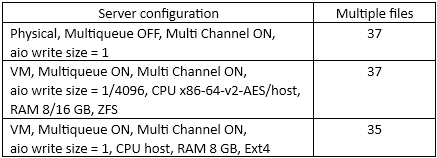
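A dataset like this averages about 0.48 MB per file (6.8 GB / 14,139 files, in decimal units), so per-file overhead matters far more than in the single-file tests. The Python sketch below computes the same three figures (file count, total size, average size) for any directory tree; the path is a placeholder.

```python
import os


def summarize_tree(root: str) -> tuple[int, int, float]:
    """Return (file count, total bytes, average bytes per file) for a directory tree."""
    count = 0
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # skip symlinks so their targets are not counted twice
            count += 1
            total += os.path.getsize(path)
    average = total / count if count else 0.0
    return count, total, average


count, total, average = summarize_tree("/path/to/test-dataset")  # placeholder path
print(f"{count:,} files, {total / 1e9:.1f} GB, average {average / 1e6:.2f} MB")
```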

Test Group 5: Copying multiple files from the physical Windows desktop

I went back to the physical Windows 11 desktop to measure how quickly it could copy multiple files of different sizes to both the physical and the virtual servers, including the ZFS virtual server.

Surprisingly, the virtual servers with ZFS showed either no speed drops or only a single brief one. The amount of RAM, the CPU type, and the aio write size setting had no significant effect on the copying. It appears that ZFS handles cache-to-disk flushes more efficiently with many smaller files than with a single large file.

In summary, copying zeros was faster, but this scenario may not represent real-world use. For the ISO file, it is noteworthy that turning both Multiqueue and Multi Channel off gave unexpectedly better performance than enabling either one of them. Increasing aio write size did not noticeably help with real files, though it did speed up copying zeros. Likewise, changing the CPU type had no significant effect on the results for non-zero files. Test Groups 4 and 5 give a more accurate picture of transfer speeds in typical work than synthetic tests with zeros or with single large files such as ISO images.